Co-Author: Sushant Paudyal

This blog explains how to scrape a PDF file using Amazon Textract and store the extracted data in Amazon DynamoDB.

Overview

Learn the steps involved in building a PDF scraping solution with Amazon Textract.

Requirement

Only specific pieces of information must be extracted from various PDFs and stored in a database.

Goals

- To extract content from PDFs using Amazon Textract

- To split multi-page PDFs into single-page files

- To achieve a fully automated system

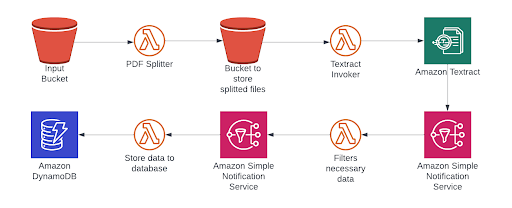

Architecture diagram

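IMPORTANT: The input bucket and the bucket that stores the split files must be DIFFERENT buckets. If the splitter wrote its pages back to the input bucket, every new page would trigger the splitter again, causing a recursive loop of invocations.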
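One way to enforce this rule in code is a small guard at the start of the splitter. This is a minimal sketch: the function name assert_distinct_buckets is hypothetical, and the bucket names would come from wherever the configuration is kept (for example, Lambda environment variables).

```python
def assert_distinct_buckets(input_bucket: str, output_bucket: str) -> None:
    """Fail fast if the splitter would write into the bucket that triggers it."""
    if not input_bucket or not output_bucket:
        raise ValueError("Both bucket names must be configured")
    if input_bucket == output_bucket:
        # Writing pages back to the input bucket would re-trigger the splitter
        # for every page it creates, producing an endless loop of invocations.
        raise ValueError(f"Input and output bucket are both '{input_bucket}'")
```

Calling it with the triggering event's bucket name and the configured output bucket stops the function before it writes anything.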

Working Methodology

- Take the PDF and split it into individual pages

- Pass the single-page PDFs to Amazon Textract

- Run concurrent Lambda functions to extract the necessary information from each page

- Store the extracted data in the database

Procedure

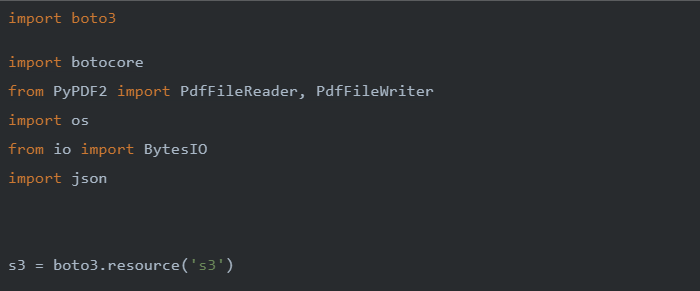

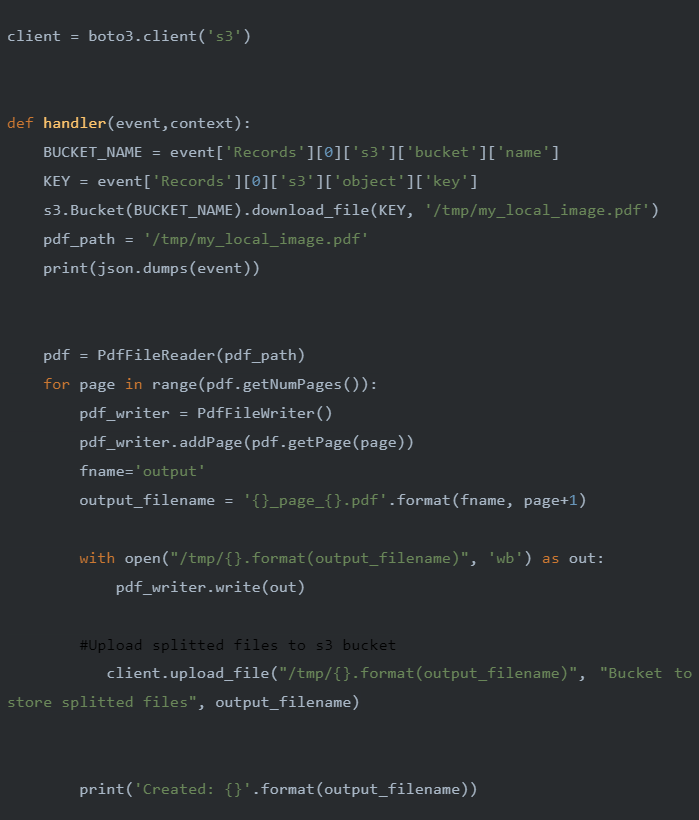

1. Take the PDF and split it into individual pages

As shown in the architecture diagram above, an input bucket is created to store the original PDF files. A Lambda function, ‘PDF Splitter’, is created and configured to be triggered by S3: an s3:ObjectCreated:Put event trigger is set up on the input bucket. The function uses the Python 3.8 runtime and relies on boto3 and PyPDF2, along with standard-library modules such as io and os, to split each PDF into single-page files. The split files are then stored in a second bucket, ‘Bucket to store split files’.
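The trigger described above can also be attached with boto3 instead of the console. A minimal sketch, assuming the function ARN is already known; build_notification_config and attach_trigger are hypothetical helper names, and the .pdf suffix filter is an optional addition. When the trigger is added through the Lambda console, the console also grants S3 permission to invoke the function; when using the API, that permission must be granted separately (for example with the Lambda add_permission call).

```python
def build_notification_config(lambda_arn: str, suffix: str = ".pdf") -> dict:
    """S3 NotificationConfiguration that invokes a Lambda function on PUT uploads."""
    return {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": lambda_arn,
                "Events": ["s3:ObjectCreated:Put"],
                # Optional: only react to object keys ending in .pdf
                "Filter": {
                    "Key": {"FilterRules": [{"Name": "suffix", "Value": suffix}]}
                },
            }
        ]
    }


def attach_trigger(input_bucket: str, lambda_arn: str) -> None:
    """Point the input bucket at the 'PDF Splitter' function."""
    import boto3  # imported lazily so the builder above has no AWS dependency

    s3 = boto3.client("s3")
    # Note: this call replaces the bucket's whole notification configuration.
    s3.put_bucket_notification_configuration(
        Bucket=input_bucket,
        NotificationConfiguration=build_notification_config(lambda_arn),
    )
```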

IAM Permissions: AmazonS3FullAccess, AWSLambdaBasicExecutionRole

Code:

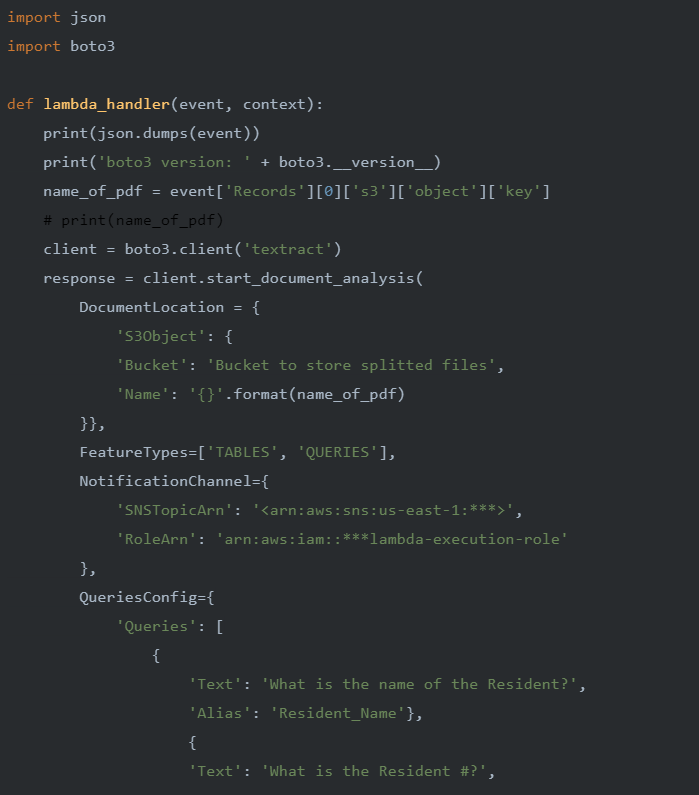
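A minimal sketch of a comparable splitter handler, assuming PyPDF2 2.x or later (its successor, pypdf, exposes the same PdfReader and PdfWriter classes) and a hypothetical OUTPUT_BUCKET environment variable holding the name of ‘Bucket to store split files’. page_key and split_pdf are illustrative helpers. PyPDF2 is not part of the Lambda runtime, so it would need to be packaged with the function or supplied as a layer.

```python
import io
import os
import urllib.parse


def page_key(source_key: str, page_number: int) -> str:
    """Derive a key for one page, e.g. 'report.pdf' -> 'report_page_1.pdf'."""
    stem, _ = os.path.splitext(source_key)
    return f"{stem}_page_{page_number}.pdf"


def split_pdf(pdf_bytes: bytes) -> list:
    """Return a list with one single-page PDF (as bytes) per page of the input."""
    # Imported here so page_key() can be used without PyPDF2 installed.
    from PyPDF2 import PdfReader, PdfWriter  # PyPDF2 >= 2.0 names

    reader = PdfReader(io.BytesIO(pdf_bytes))
    pages = []
    for page in reader.pages:
        writer = PdfWriter()
        writer.add_page(page)
        buffer = io.BytesIO()
        writer.write(buffer)
        pages.append(buffer.getvalue())
    return pages


def lambda_handler(event, context, s3=None):
    """Split every uploaded PDF and write its pages to the output bucket."""
    if s3 is None:
        import boto3  # bundled with the Lambda Python runtime
        s3 = boto3.client("s3")
    output_bucket = os.environ["OUTPUT_BUCKET"]  # hypothetical variable name
    written = []
    for record in event["Records"]:
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        body = s3.get_object(Bucket=bucket, Key=key)["Body"].read()
        for number, page_bytes in enumerate(split_pdf(body), start=1):
            new_key = page_key(key, number)
            s3.put_object(
                Bucket=output_bucket,
                Key=new_key,
                Body=page_bytes,
                ContentType="application/pdf",
            )
            written.append(new_key)
    return {"written": written}
```

The S3 client is an optional parameter so the handler can be exercised with a stub client outside AWS; Lambda itself calls lambda_handler(event, context), in which case the default boto3 client is created.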

2. Pass the PDFs to Amazon Textract

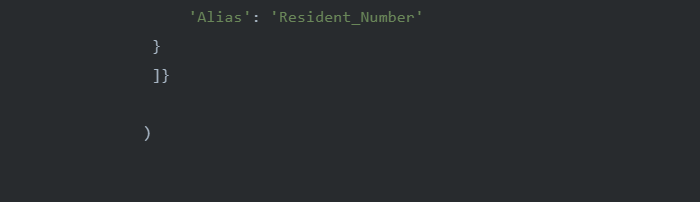

Next, another Lambda function, ‘Textract Invoker’, is created and triggered by the S3 bucket ‘Bucket to store split files’. This function starts an asynchronous document analysis job in Amazon Textract and specifies an Amazon SNS topic as the notification channel, so that Textract publishes a message when each job finishes. The Tables and Queries feature types are enabled, and the queries are written to ask for the specific information to be extracted.

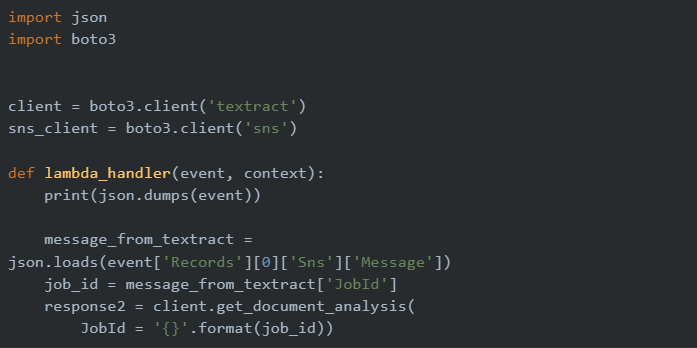

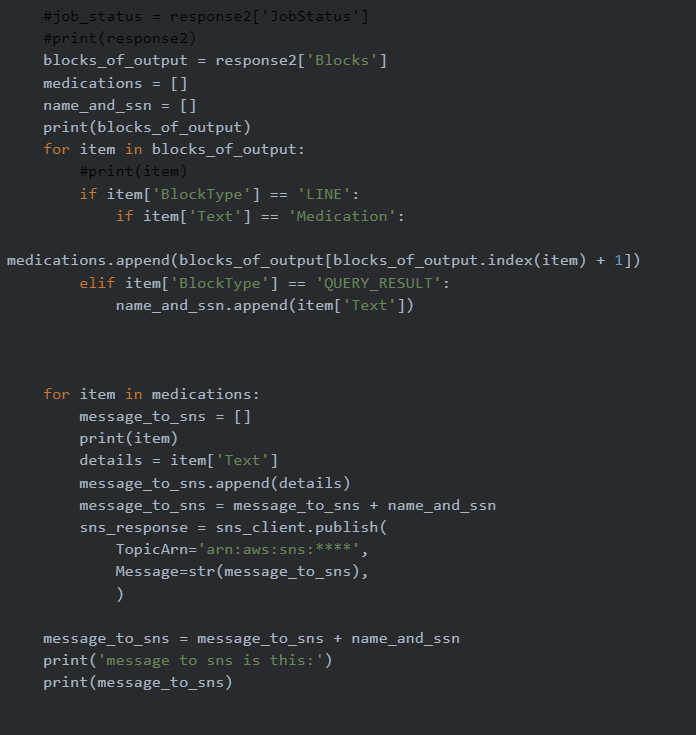
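A minimal sketch of the ‘Textract Invoker’, assuming environment variables SNS_TOPIC_ARN (the notification topic) and TEXTRACT_ROLE_ARN (an IAM role that Textract assumes to publish to that topic). Both variable names, the query texts, and the aliases NAME and RESIDENT_NUMBER are hypothetical placeholders for the document's real queries.

```python
import os
import urllib.parse

# Hypothetical queries; replace with the fields the documents actually contain.
QUERIES = [
    {"Text": "What is the name?", "Alias": "NAME"},
    {"Text": "What is the resident number?", "Alias": "RESIDENT_NUMBER"},
]


def build_analysis_request(bucket: str, key: str, topic_arn: str, role_arn: str) -> dict:
    """Keyword arguments for textract.start_document_analysis()."""
    return {
        "DocumentLocation": {"S3Object": {"Bucket": bucket, "Name": key}},
        "FeatureTypes": ["TABLES", "QUERIES"],
        "QueriesConfig": {"Queries": QUERIES},
        # Textract publishes a completion message to this topic using role_arn.
        "NotificationChannel": {"SNSTopicArn": topic_arn, "RoleArn": role_arn},
    }


def lambda_handler(event, context, textract=None):
    """Start one asynchronous analysis job per split page."""
    if textract is None:
        import boto3
        textract = boto3.client("textract")
    job_ids = []
    for record in event["Records"]:
        bucket = record["s3"]["bucket"]["name"]
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        request = build_analysis_request(
            bucket, key, os.environ["SNS_TOPIC_ARN"], os.environ["TEXTRACT_ROLE_ARN"]
        )
        response = textract.start_document_analysis(**request)
        job_ids.append(response["JobId"])
    return {"JobIds": job_ids}
```

start_document_analysis only starts the job and returns a JobId; the results are fetched later, once Textract has published its completion message to the SNS topic.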

3. Run concurrent Lambda functions to extract the necessary information from each page

The SNS topic then triggers the Lambda function ‘Filters necessary data’. Because each completed Textract job produces its own notification, the pages are processed concurrently in separate invocations. The function extracts the necessary data from each analysis result and publishes it to an Amazon SNS topic.

IAM Permissions: AmazonSNSFullAccess, AWSLambdaBasicExecutionRole

Code:

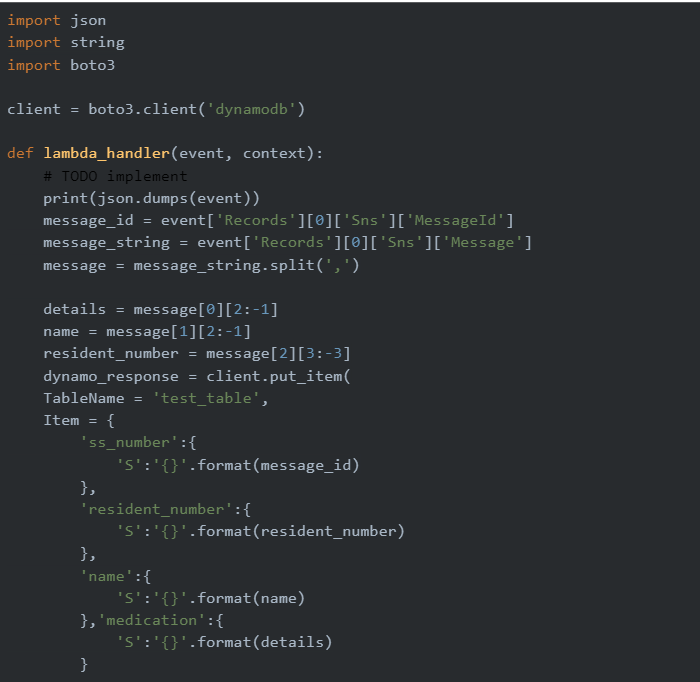
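A minimal sketch of the ‘Filters necessary data’ function. It parses Textract's completion message (a JSON string with fields such as JobId, Status, and DocumentLocation), pages through get_document_analysis using NextToken, and maps each QUERY block to the text of its QUERY_RESULT answer blocks through their ANSWER relationships. The RESULTS_TOPIC_ARN environment variable, the helper names, and the added source field are assumptions for illustration. Because this sketch calls get_document_analysis, its role would also need the textract:GetDocumentAnalysis permission.

```python
import json
import os


def extract_query_answers(blocks: list) -> dict:
    """Map each query alias (or query text) to its answer text."""
    by_id = {block["Id"]: block for block in blocks}
    answers = {}
    for block in blocks:
        if block["BlockType"] != "QUERY":
            continue
        label = block["Query"].get("Alias") or block["Query"]["Text"]
        for rel in block.get("Relationships", []):
            if rel["Type"] == "ANSWER":
                texts = [by_id[i]["Text"] for i in rel["Ids"] if i in by_id]
                answers[label] = " ".join(texts)
    return answers


def get_all_blocks(textract, job_id: str) -> list:
    """Collect every block of a finished analysis job, following NextToken."""
    blocks, token = [], None
    while True:
        kwargs = {"JobId": job_id}
        if token:
            kwargs["NextToken"] = token
        response = textract.get_document_analysis(**kwargs)
        blocks.extend(response.get("Blocks", []))
        token = response.get("NextToken")
        if not token:
            return blocks


def lambda_handler(event, context, textract=None, sns=None):
    """Turn each Textract completion notification into a published result."""
    if textract is None or sns is None:
        import boto3
        textract = textract or boto3.client("textract")
        sns = sns or boto3.client("sns")
    for record in event["Records"]:
        # Textract's notification is a JSON string inside the SNS message.
        message = json.loads(record["Sns"]["Message"])
        if message["Status"] != "SUCCEEDED":
            continue
        answers = extract_query_answers(get_all_blocks(textract, message["JobId"]))
        answers["source"] = message["DocumentLocation"]["S3ObjectName"]
        sns.publish(TopicArn=os.environ["RESULTS_TOPIC_ARN"], Message=json.dumps(answers))
```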

4. Store the extracted data in the database

When a message is published to the Amazon SNS topic, the final Lambda function, ‘store to database’, is triggered. This function reads the message and writes an item to DynamoDB using the client.put_item() method. Each item contains the name, resident number, and details.

IAM Permissions: AmazonDynamoDBFullAccess

Code:
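A minimal sketch of the ‘store to database’ function, assuming a table whose partition key is a string attribute named resident_number and a hypothetical TABLE_NAME environment variable. The attribute names and the choice to keep the whole result as a JSON string in details are illustrative. The low-level client.put_item() call requires each value to be wrapped in a type descriptor such as {"S": ...}.

```python
import json
import os


def to_dynamodb_item(payload: dict) -> dict:
    """Convert one published result into DynamoDB's typed attribute format."""
    return {
        # Hypothetical attribute names; the key attribute must match the table schema.
        "resident_number": {"S": payload.get("RESIDENT_NUMBER", "")},
        "name": {"S": payload.get("NAME", "")},
        # Keep the full result (including any other fields) as a JSON string.
        "details": {"S": json.dumps(payload, sort_keys=True)},
    }


def lambda_handler(event, context, dynamodb=None):
    """Write each SNS message from the filter step to DynamoDB."""
    if dynamodb is None:
        import boto3
        dynamodb = boto3.client("dynamodb")
    stored = 0
    for record in event["Records"]:
        payload = json.loads(record["Sns"]["Message"])
        item = to_dynamodb_item(payload)
        if not item["resident_number"]["S"]:
            # Key attributes cannot be empty strings, so skip (and log) incomplete results.
            print("Skipping result without a resident number:", payload)
            continue
        dynamodb.put_item(TableName=os.environ["TABLE_NAME"], Item=item)
        stored += 1
    return {"stored": stored}
```

Messages without a resident number are skipped because DynamoDB rejects empty strings in key attributes.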

Result:

The PDF is processed with Amazon Textract, and the required data is inserted into the DynamoDB table.

Monitoring:

- Amazon CloudWatch Logs records the output of each Lambda function, which helps trace events and troubleshoot errors during the execution of the system.
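By default, anything a Lambda function prints is sent to a CloudWatch Logs log group named /aws/lambda/ plus the function name. A minimal sketch, using a hypothetical helper log_stage, that writes one JSON line per pipeline step so the events can be filtered by field in CloudWatch Logs Insights:

```python
import json


def log_stage(stage: str, **fields) -> str:
    """Print one JSON line per pipeline step; Lambda sends stdout to CloudWatch Logs."""
    line = json.dumps({"stage": stage, **fields}, sort_keys=True)
    print(line)
    return line
```

For example, the splitter could call log_stage("split", key="report.pdf", pages=3) after writing its pages, and the Textract Invoker could log each JobId it starts.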